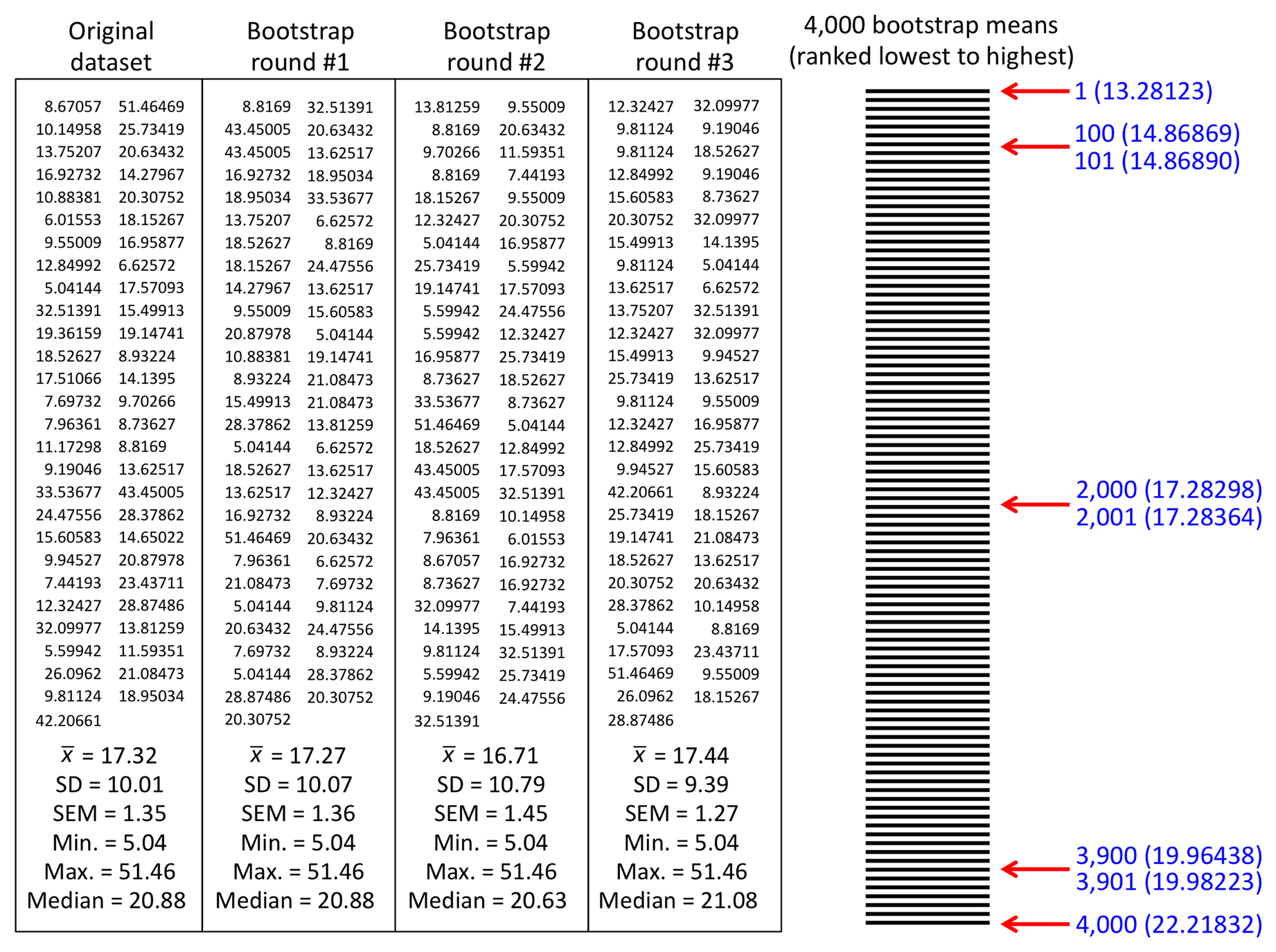

This one-year full-time programme provides outstanding training in both theoretical and applied statistics, with a focus on Data Science.

The modules focus on a wide variety of tools and techniques related to the scientific handling of data at scale, including machine learning theory, data transformation and representation, data visualisation and the use of analytic software. The course will equip students with a range of transferable skills, including programming, problem-solving, critical thinking, scientific writing, project work and presentation, enabling them to take on prominent roles in a wide array of employment and research sectors.

The programme is split between taught core and optional modules in the Autumn and Spring terms (66.67% weighting) and a research project in the Summer term (33.33% weighting). Core modules are offered in the Autumn and Spring terms.

PLEASE NOTE: The programme is substantially the same from year to year, but there may be some changes to the modules listed below.

Autumn term core modules

The emergence of Big Data as a recognised and sought-after technological capability is due to the following factors:

- the general recognition that data is omnipresent, an asset from which organisations can derive business value;
- the efficient interconnectivity of sensors, devices, networks, services and consumers, allowing data to be transported with relative ease;
- the emergence of middleware processing platforms, such as Hadoop, InfoSphere Streams, Accumulo, Storm, Spark, Elastic Search, …, which, in general terms, empower the developer to efficiently create distributed, fault-tolerant applications that execute statistical analytics at scale.

In statistical inference, experimental or observational data are modelled as the observed values of random variables, to provide a framework from which inductive conclusions may be drawn about the mechanism giving rise to the data. This is done by supposing that the random variable has an assumed parametric probability distribution: the inference is performed by assessing some aspect of the parameter of the distribution. This module develops the main approaches to statistical inference for point estimation, hypothesis testing and confidence set construction. The focus is on describing the key elements of Bayesian, frequentist and Fisherian inference through development of the central underlying principles of statistical theory. Formal treatment is given of a decision-theoretic formulation of statistical inference. Key elements of Bayesian and frequentist theory are described, focussing on inferential methods deriving from important special classes of parametric problem and on the application of principles of data reduction. General-purpose methods of inference deriving from the principle of maximum likelihood are detailed. Throughout, particular attention is given to evaluating the comparative properties of competing methods of inference.
